The Loop Lab Busan Exhibition is a collaborative citywide event spanning approximately 20 cultural spaces, including public and private museums, alternative spaces, and galleries throughout Busan, Korea.

www.looplabbusan.com/exhibition

www.ocadu.ca/events-and-exhibitions/research-talks-dr-haru-ji

From the author/composer:

"For this album I have assembled a collection of contrasting works from the archives. Some have previously been released, others not. Three pieces: Sculptor, Touche pas, and Bubble chamber are based on the microsound techniques of granular synthesis and micro-montage. By contrast, Modulude, Clang-tint, and Still life were conceived before my microsound period."

"For any given piece, my compositional practice usually takes years. For example, Modulude was initially conceived in 1998 and finished 23 years later. What I call my microsound period began in 1998 and culminated in the book Microsound (The MIT Press) and the album POINT LINE CLOUD (2004), re-issued by the Presto?! label (Milan) in 2019. Sculptor appeared on that album. Clang-tint traces back to 1991. It was finally released in 2021 by the SLOWSCAN label (’s-Hertogenbosch, The Netherlands) in a limited edition LP. Prior to this, the first movement of Clang-tint, Purity, appeared on the album CCMIX Paris (2001 Mode Records, New York). The origins of Still life date back even further, to the 1980s. Touche pas appeared on the DVD FLICKER TONE PULSE (2019 Wergo Schallplatten, Mainz). Bubble chamber is a new release."

ellirecords.bandcamp.com/album/electronic-music-1994-2021

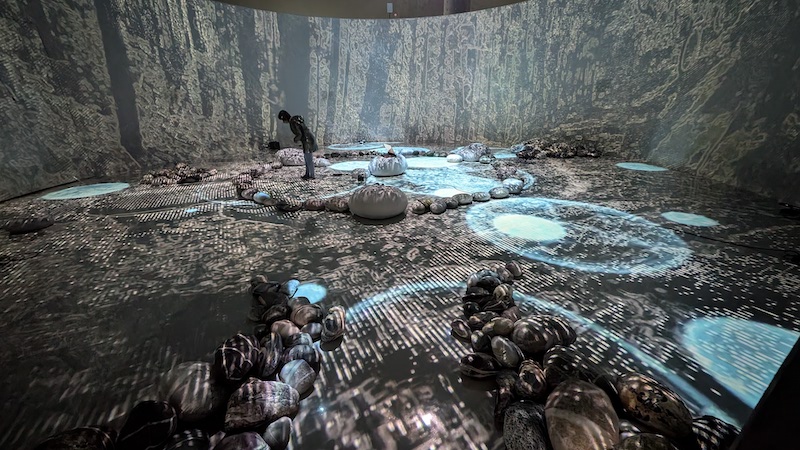

Production Team: Olifa Hsieh (MAT visiting scholar), Timothy Wood (MAT researcher), and Weihao Qiu (MAT PhD student).

The subconscious is where your intrinsic qualities thrive; where seeds of inspiration reside; and where many impulses, emotions, and thoughts are hidden and never expressed. Sometimes they only appear in dreams.

α-Forest is a participatory immersive theater work with healing qualities, created by three artists: Olifa Ching-Ying Hsieh, Timothy Wood, and Weihao Qiu. The work integrates electronic sound, interactive design, and AI algorithmic imaging technology to capture the audience’s brainwaves (electroencephalography, EEG) and collect data on their physical movements, resulting in real-time co-created content. At a residency base offered by the Experimental Forest of National Taiwan University, the artists collected unique forest sounds from Nantou, a mountainous area in central Taiwan. They also visited the region’s indigenous tribe and learned about its culture.

More about the exhibition (PDF)

The National Taiwan Museum of Fine Arts

George Legrady: Scratching the Surface. Digital Pictures from the 1980s to Present.

RCM Galerie, Paris

Tuesday, December 17, 2024 to Sunday, February 16, 2025

32 rue de Lille, 75007

Tue-Fri 2pm-7pm & by appointment

whitehotmagazine.com/articles/32-rue-de-lille-paris/6789

Dr. Rincon is a Postdoctoral Research Fellow at the AlloSphere Research Facility at UC Santa Barbara. His interests include Architectural Design/Engineering, Computational Design and Fabrication, New Media Architectures, and Immersive VR Environments.

https://leonardo.info/blog/2025/01/21/recognition-of-leonardos-outstanding-peer-reviewers

As part of the research team, they wrote the software and created the generative and AI content for the study: "Audio-Visual System to Mitigate the Negative Effects on Stress and Depression in Confined Spaces and Extreme Environments."

The research field relates to neuro-architecture and focuses on Media and Design Interventions in Isolated, Confined, and Extreme (ICE) Environments, utilizing generative design and Artificial Intelligence tools to create virtual environments that aim to reduce the negative psychological impacts of long-term isolation.

Leading the Greek expedition, Architect-Engineers Christina Balomenaki and Efharis Gourounti have made history as the first Greek women researchers to set foot in Antarctica. The research conducted during this mission will contribute to the scientific understanding of human adaptation in extreme environments and strengthen Greece's position in international polar research.

The project is jointly led by Professor Konstantinos-Alketas Oungrinis, Vice-Rector for Research and Innovation; Marianthi Liapi, Research Program Director at the Transformable Intelligent Environments Laboratory (TUC TIE Lab); and Professor Michael Zervakis, Rector of the Technical University of Crete and Director of the DISPLAY Lab at the School of Electrical and Computer Engineering.

www.eurecapro.eu/tuc-scientific-expedition-in-antarctica

Weihao is currently a PhD candidate in the Media Arts and Technology (MAT) program, where his research is centered on improving the modularity, customizability, and interactivity of Generative AI tools. His primary goal is to enhance the diversity, expressiveness, and audience connectedness of AI-based artworks. Weihao's research contributions have been published at conferences such as Neural Information Processing Systems (NeurIPS), the ACM Conference on Human Factors in Computing Systems (CHI), and the International Conference on Robotics and Automation (ICRA). His artwork has been exhibited in venues such as Beijing Times Art Museum, FeraFile, The Wolf Museum of Exploration + Innovation (MOXI), SIGGRAPH, and UCSB MAT End of Year Shows.

The intent of the fellowship is to provide graduate students who are passionate about exploring the intersection of multiple fields related to the initiative with the opportunity to participate in research projects and activities organized as part of the annual summit.

Mellichamp Initiative in Mind & Machine Intelligence Summit 2024

ACM SIGGRAPH is the premier conference and exhibition on computer graphics and interactive techniques. This year they celebrate their 50th conference and reflect on half a century of discovery and advancement while charting a course for the bold and limitless future ahead.

Burbano is a native of Pasto, Colombia and an associate professor in Universidad de los Andes’s School of Architecture and Design. As a contributor to the conference, Burbano has presented research within the Art Papers program (in 2017), and as a volunteer, has served on the SIGGRAPH 2018, 2020, and 2021 conference committees. Most recently, Burbano served as the first-ever chair of the Retrospective Program in 2021, which honored the history of computer graphics and interactive techniques. Andres received his PhD from Media Arts and Technology in 2013.

Read more on the ACM SIGGRAPH website and in this article on the ACM SIGGRAPH Blog.

The next ACM SIGGRAPH conference is in August 2023 and will be held in Los Angeles, California: s2023.siggraph.org

This SPARKS session focuses on innovative interactive digital artworks and pioneering artists prior to the year 2000. Interactive digital art’s roots began forming in the 1960s and blossomed in the following decades. By relinquishing the power to control the outcome of a work of art, digital artists from the 1960s to the 1990s established a democratic, reciprocal relationship with the viewer. Without a defined history, artists were free to experiment and create works that capitalized on the concept of “possibilities”. These individualized personal art experiences took many forms, including screen-based art, immersive installation environments, haptic device art, and much more.

https://dac.siggraph.org/sparks/feb-2024-pioneering-interactive-art-and-artists

This event is co-moderated by MAT alumnus Dr. Myungin Lee and co-sponsored by the ACM SIGGRAPH History Committee.