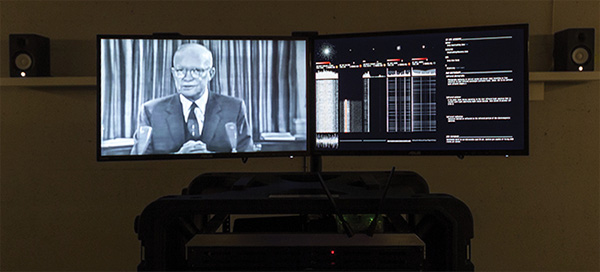

"Only an alert and knowledgeable citizenry can compel the proper meshing of the huge industrial and military machinery of defense with our peaceful methods and goals, so that security and liberty may prosper together" - Dwight David "Ike" Eisenhower, January 1961.

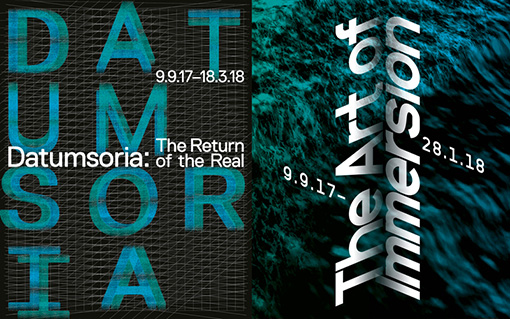

Electromagnetic forces can be shaped, modulated, monitored or transformed so that they can be utilized. The FRAGILE SAFARI situations are constituted as a ‘parcours’ through two bodies of work. The first is the evolved signals intelligence (SIGINT) work We Should Take Nothing for Granted – On The Building of An Alert And Knowledgeable Citizenry; the second is the spatial electromagnetic installation STAR VALLEY (ICARUS).

Together, the works provoke a sensorial experience of the immaterial electromagnetic spectrum and open well-defined societal questions about notions of privacy in the 21st century. Collectively, the elements form a tactical media landscape conceived as an “electromagnetic theatre” that engages visitors/participants on multiple levels: technologically, intuitively, intellectually and politically. Arguably, the electromagnetic spectrum is the most valuable yet inexhaustible natural resource, while its control and use remain strategically significant and economically vital.

Central to both works are probes: devices that examine multiple aspects of the electromagnetic spectrum by monitoring or transmitting signals in order to observe or occupy it. These acts activate civic potentials to engage with and re-imagine the relationship between the global citizenry and sovereign actors, including their military-industrial complexes with their visible, opaque and dark structures, by addressing current positions and debates about the notions and structuring of privacy, surveillance states, and safety. Each element in FRAGILE SAFARI is the result of research into, and construction of, hardware and software systems to be used as a set of tools for gaining knowledge of the occupation, use and potential misuse of global mass communication infrastructures.

STAR VALLEY (ICARUS) is a single spark-gap transmitter that occupies and overwhelms local signals. The transmitter is controlled by a computer running a neural network trained on US/NATO codenames (describing units, orders of battle and/or military operations) and their descriptions. The network generates new names, theatres and directives for ‘imaginary’ operations, both from the past and projected into the future, highlighting the intentional obfuscation of facts from the public.
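The text does not describe the network itself. To illustrate the general idea of learning from a corpus of codenames and sampling new ones, the sketch below swaps in a much simpler technique, a character-level Markov chain, rather than a neural network. The function names, the chain order of 2 and the `SAMPLE` list (a handful of publicly known US operation names) are illustrative assumptions, not the project's model or training set.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # padding symbols; assumed never to occur in the training names


def train(names, order=2):
    """Character-level Markov model: maps each `order`-character context to the characters seen after it."""
    model = defaultdict(list)
    for name in names:
        padded = START * order + name + END
        for i in range(len(padded) - order):
            model[padded[i:i + order]].append(padded[i + order])
    return model


def generate(model, order=2, rng=None, max_len=20):
    """Sample one new name by walking the chain until the end symbol (or max_len characters)."""
    rng = rng or random.Random()
    context = START * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[context])
        if nxt == END:
            break
        out.append(nxt)
        context = context[1:] + nxt
    return "".join(out)


# Hypothetical illustrative corpus of publicly known US operation names (not the project's data).
SAMPLE = ["DESERT STORM", "JUST CAUSE", "URGENT FURY", "ROLLING THUNDER",
          "ENDURING FREEDOM", "EARNEST WILL", "DESERT SHIELD", "NOBLE EAGLE"]

if __name__ == "__main__":
    model = train(SAMPLE)
    rng = random.Random(1961)  # fixed seed so runs are repeatable
    for _ in range(3):
        print(generate(model, rng=rng))
```

Because every context reached while sampling was itself observed in training, the walk never hits an empty context; a neural model would instead learn longer-range structure such as plausible word pairings.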

Lastly, We Should Take Nothing for Granted – On The Building of An Alert And Knowledgeable Citizenry is designed to demonstrate the fragility of the local communications infrastructure by probing visitors’ personal communication devices, while creating a generative sonic landscape from over 20 years of collective signal-monitoring archives, using Eisenhower’s 1961 presidential farewell address as a compositional tool.

The title of the series FRAGILE SAFARI is derived from another “safari”, BIG SAFARI, a specialized classified (“black”) USAF program dedicated to modifying air systems for the collection and processing of SIGINT (signals intelligence) and COMINT (communications intelligence) data, as well as for offensive and defensive electronic warfare. It is one of the oldest programs related to electronic warfare and has been responsible for much of the SIGINT collection globally since 1952.

The works on display are a small part of the evolving toolkit that Biederman and Peljhan have been creating over the past 20 years in order to re-examine and redefine our relationship to the political, philosophical and physical conditions of the electromagnetic spectrum.

www.pavedarts.ca/2018/matthew-biederman-marko-peljhan-fragile-safari

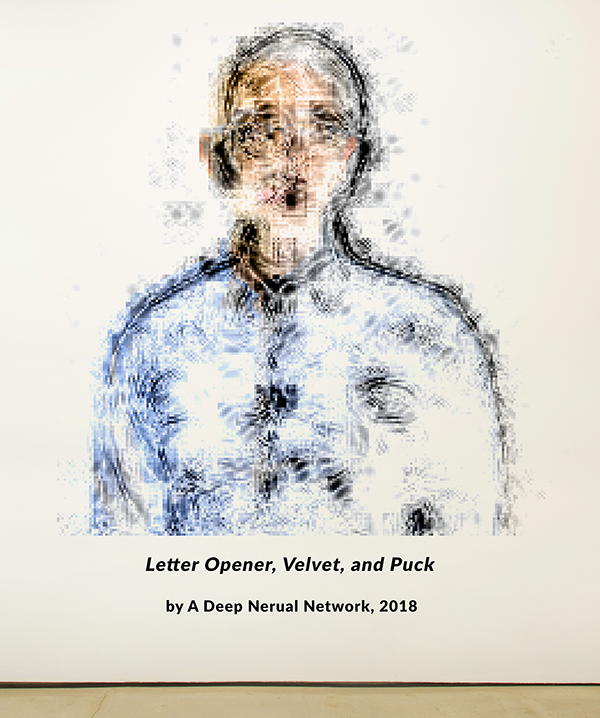

In collaboration with Sam Green, a UCSB Computer Science PhD candidate, Jieliang Luo presented "Human Portrait Decomposition" at the IEEE VIS 2018 Art Program, held in Berlin, Germany from October 23rd to 25th. The project aims to raise awareness of the fragility of our digital identities as managed by intelligent machines, by showing how a robust, well-trained system may misinterpret human portraits. It was supervised by Professor George Legrady, with contributions from MAT Masters student Lu Liu (UX design).

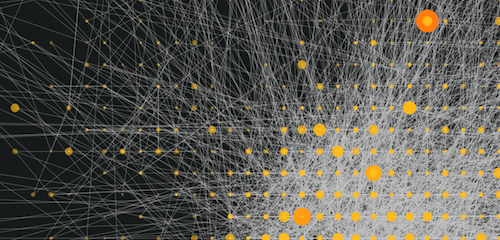

This paper presents BeHAVE, a web-based audiovisual piece that explores a multimodal way to represent personal behavioral data by visualizing and sonifying it in the form of a heatmap. As a conventional visualization, it shows the location and time records of the author’s mobile phone use as clustered circles on an interactive map. To present all of the data sequentially within a short time, it also transforms a year of data into sound and visuals on a microsound timescale. By proposing this multimodal representation as a means of revealing one’s personality or behavior in audiovisual form, BeHAVE aims not only to improve the perception and understanding of self-tracking data but also to arouse aesthetic enjoyment.
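The text does not give BeHAVE's exact mapping. Assuming a simple linear time compression, a year of timestamps can be squeezed into a short playback window: with a hypothetical 60-second target, one day of phone use spans about 164 ms of playback and one hour about 6.85 ms, which is roughly the length of an individual sound grain. The function name, the 60 s target and the choice to drop out-of-range events are assumptions for illustration.

```python
from datetime import datetime, timedelta
from typing import Iterable, List

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s; leap days ignored for simplicity


def to_grain_onsets(timestamps: Iterable[datetime], start: datetime,
                    target_seconds: float = 60.0) -> List[float]:
    """Linearly compress one year of event times into `target_seconds` of playback.

    Returns each event's onset (in seconds) on the compressed timeline, in time order.
    Events outside [start, start + 365 days) are dropped.
    """
    scale = target_seconds / SECONDS_PER_YEAR
    onsets = []
    for ts in sorted(timestamps):
        offset = (ts - start).total_seconds()
        if 0 <= offset < SECONDS_PER_YEAR:
            onsets.append(offset * scale)
    return onsets


if __name__ == "__main__":
    start = datetime(2017, 1, 1)
    events = [start, start + timedelta(hours=1), start + timedelta(days=1)]
    print(to_grain_onsets(events, start))  # onsets in seconds on the 60 s timeline
```

A linear mapping preserves the rhythm of daily habits (dense daytime clusters, sparse nights) as audible density changes; a non-linear mapping could emphasize particular periods instead.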

Brief Description

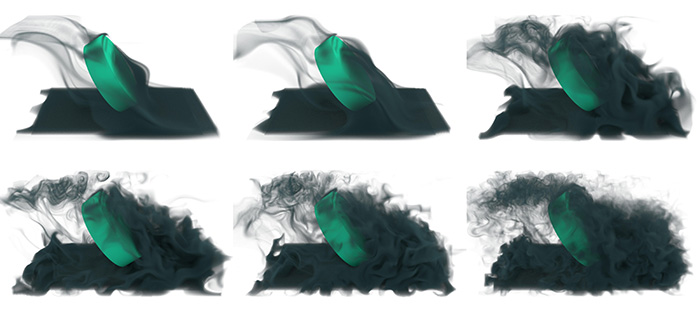

We show how to make Laplacian Eigenfunctions for fluid simulation faster, more memory efficient, and more general. We surpass the scalability of the original algorithm by two orders of magnitude.
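As background only, the sketch below shows the closed-form velocity basis commonly used to introduce Laplacian-eigenfunction fluid simulation on the square domain [0, π]². Each basis field is divergence-free, has zero normal velocity on the walls, and is an eigenfunction of the Laplacian with eigenvalue −(k1² + k2²), so viscosity simply decays each coefficient independently. It does not reproduce the advection step or any of the speed, memory or generality improvements described above; the mode choices and viscosity `nu` are illustrative assumptions.

```python
import math


def eigenvalue(k1, k2):
    """Laplacian eigenvalue of mode (k1, k2) on [0, pi]^2."""
    return -(k1 * k1 + k2 * k2)


def basis_velocity(k1, k2, x, y):
    """Divergence-free Laplacian eigenfunction (velocity field) for integer mode (k1, k2)."""
    inv = 1.0 / (k1 * k1 + k2 * k2)
    u = inv * k2 * math.sin(k1 * x) * math.cos(k2 * y)
    v = -inv * k1 * math.cos(k1 * x) * math.sin(k2 * y)
    return u, v


def velocity(coeffs, x, y):
    """Reconstruct velocity as a weighted sum of basis fields; coeffs maps (k1, k2) -> weight."""
    u = v = 0.0
    for (k1, k2), w in coeffs.items():
        bu, bv = basis_velocity(k1, k2, x, y)
        u += w * bu
        v += w * bv
    return u, v


def viscous_step(coeffs, nu, dt):
    """Viscosity is diagonal in this basis: w_k <- w_k * exp(nu * lambda_k * dt)."""
    return {k: w * math.exp(nu * eigenvalue(*k) * dt) for k, w in coeffs.items()}


def divergence(coeffs, x, y, h=1e-5):
    """Central-difference estimate of du/dx + dv/dy, used here as a sanity check."""
    du = (velocity(coeffs, x + h, y)[0] - velocity(coeffs, x - h, y)[0]) / (2 * h)
    dv = (velocity(coeffs, x, y + h)[1] - velocity(coeffs, x, y - h)[1]) / (2 * h)
    return du + dv


if __name__ == "__main__":
    state = {(1, 1): 1.0, (2, 3): 0.5}
    print(velocity(state, 0.7, 1.3), divergence(state, 0.7, 1.3))
    print(viscous_step(state, nu=0.1, dt=1.0))  # higher modes decay faster
```

Storing the state as a few coefficients instead of a full grid is what makes the representation compact; the cost in the full method comes mainly from the nonlinear advection term coupling the modes.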

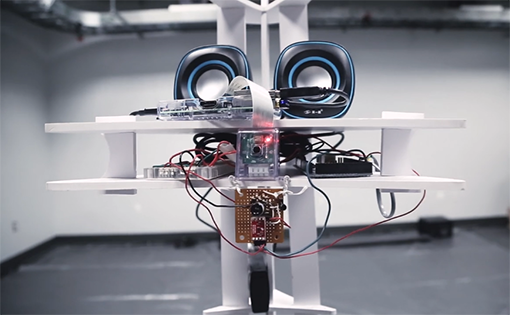

With "HIVE" we intend to explore the idea of a sonic intelligence: learning, experiencing, reacting, and finally, “thinking” in sound. Can we model such a system? A system with a body whose morphology is based on picking up and sending sound signals, a system that can learn from its environment and evolve in its response, a pseudo ‘being’ that traces our sonic footprint and projects our sonic reflection.

Created by fusing aspects of sculptural form, spatial sound, and interactive methods, "HIVE" explores the relationship between sound, space, body, and communication. "HIVE" was produced in 2016 by Sölen Kiratli and Akshay Cadambi and debuted at the Santa Barbara Center for Art, Science, and Technology (SBCAST) in December 2016. It was exhibited at ACM SIGGRAPH Asia 2017 in Bangkok, Thailand, November 28 through 30. More info at solenk.net/HIVE.php

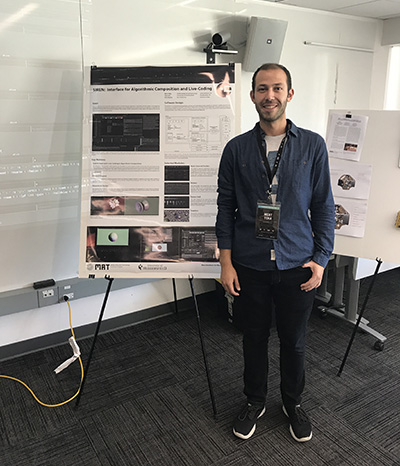

Siren is a software environment for exploring rhythm and time through the lenses of algorithmic composition and live-coding. It leverages the virtually unlimited creative potential of algorithms by treating code snippets as the building blocks for audiovisual playback and synthesis. Using the textual paradigm of programming as the software primitive allows the execution of patterns that would be either impossible or too laborious to create manually.

The system is designed to operate in a general-purpose manner by allowing multiple compilers to operate at the same time. Currently, it accommodates SuperCollider and TidalCycles as its primary programming languages due to their stable real-time audio generation and event dispatching capabilities.

Harnessing the complexity of a textual representation (i.e. code) can be cognitively challenging in an interactive real-time application. Siren tackles this by adopting a hybrid of the textual and visual paradigms. Its front-end interface is equipped with various structural and visual components to organize, control and monitor the textual building blocks. Its multi-channel tracker acts as a temporal canvas for organizing scenes, on which code snippets can be parameterized and executed. The tracker is built on a hierarchical structure that eases the control of complex phrases: small modifications propagate to lower levels, producing dramatic changes in the audiovisual output with minimal effort. The interface also provides tools for monitoring the current audio playback, such as a piano-roll-inspired visualization and history components.
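One way to picture this hierarchical propagation is a tree of scenes, tracks and snippets in which each node's parameters override those inherited from its ancestors, so a single change at scene level reaches every snippet below it unless a lower level overrides it. The class and function names, the `{placeholder}` templating and the TidalCycles-style snippet strings are hypothetical; this is a sketch of the idea, not Siren's actual data model.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional, Tuple


@dataclass
class Node:
    """A hypothetical scene, track or snippet node in a parameter hierarchy."""
    name: str
    params: Dict[str, str] = field(default_factory=dict)
    template: Optional[str] = None  # code snippet with {placeholders}; only leaves carry one
    children: List["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        self.children.append(child)
        return child


def render(node: Node, inherited: Optional[Dict[str, str]] = None) -> Iterator[Tuple[str, str]]:
    """Walk the tree; each node's params override those inherited from its ancestors."""
    scope = {**(inherited or {}), **node.params}
    if node.template is not None:
        yield node.name, node.template.format(**scope)
    for child in node.children:
        yield from render(child, scope)


if __name__ == "__main__":
    scene = Node("scene1", {"speed": "1"})
    track = scene.add(Node("track1"))
    track.add(Node("drums", template='d1 $ slow {speed} $ s "bd*2 sn"'))
    track.add(Node("bass", {"speed": "2"}, template='d2 $ slow {speed} $ s "bass"'))
    print(dict(render(scene)))
    scene.params["speed"] = "4"  # one edit at scene level...
    print(dict(render(scene)))   # ...reaches "drums"; "bass" keeps its own override
```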

"Mandala" by Jiayue Cecilia Wu

The event this year was held from March 29-31 at the University of Oregon School of Music and Dance in Eugene, Oregon.

SEAMUS 2018 at the University of Oregon

The piece will also be performed at Stanford University and Mills College during the California Electronic Music Exchange, April 5-7, 2018.

The exhibition consists of a wall of 180 photographs organized in 20 thematic clusters of images showing the way of life in 1973 in four James Bay Cree villages in the Canadian sub-arctic. The exhibition also includes two large screens featuring video documentation by Andres Burbano of village scenes recorded during two return trips in 2012 and 2014.

In 1973, the Cree invited Professor Legrady to photo-document their daily life as a way to strengthen their negotiations with the Canadian government over land rights. At the time, the Québec government planned a hydroelectric project that would flood a significant area of Cree land in the James Bay region. Although the project went forward, the Cree were able to leverage the issue and negotiate self-governance, improving their political and social position within Québec.

A panel discussion will be held on Thursday, January 18, at 4pm, in room 1312 of the UCSB Library, followed by a reception and exhibition walk-throughs.

Hannen Wolfe's robot "ROVER" studies the nature of human-robot interactions.

The exhibition will be on display from September 2017 until March 2018 at the Zentrum für Kunst und Medien (Center for Art and Media), one of Europe’s most important digital media arts museums.

"Voice of Sisyphus" consists of a large projection of a black and white photograph taken at a formal ball, an image reminiscent of the staging of the Alain Resnais film "Last Year in Marienbad". Custom software was developed that unfolds in eight audiovisual phases, each with its own set of image segmentation, filtering, and animation processes, translating the pixel data into a continuous four-channel sonic experience distributed through the four corners of the exhibition space.

Production credits include: George Legrady (concept and project development), Ryan McGee (image analysis, sound synthesis and spatialization software), and Joshua Dickinson (audio-visual composition software).
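The text does not specify how image segments become sound. One plausible reading, sketched here purely as an assumption, is that each segment's mean brightness sets its loudness while its position in the frame sets its place among the four corner speakers, using equal-power bilinear panning. The function names, the corner order (top of the image treated as the front of the room) and the brightness-to-loudness mapping are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def quad_gains(x: float, y: float) -> Tuple[float, float, float, float]:
    """Equal-power gains for four corner speakers: (front-left, front-right, rear-left, rear-right).

    x, y in [0, 1] give the source position (y = 0 is the front); the squared gains sum to 1.
    """
    return (
        math.sqrt((1 - x) * (1 - y)),
        math.sqrt(x * (1 - y)),
        math.sqrt((1 - x) * y),
        math.sqrt(x * y),
    )


def segment_to_channels(image: Sequence[Sequence[int]],
                        x0: int, y0: int, x1: int, y1: int) -> List[float]:
    """Map one rectangular segment [x0, x1) x [y0, y1) of a grayscale image (0-255) to 4 channel amplitudes.

    Mean brightness sets loudness; the segment centre sets its position in the room.
    """
    height, width = len(image), len(image[0])
    pixels = [image[r][c] for r in range(y0, y1) for c in range(x0, x1)]
    loudness = sum(pixels) / (len(pixels) * 255.0)
    cx = ((x0 + x1) / 2) / width
    cy = ((y0 + y1) / 2) / height
    return [loudness * g for g in quad_gains(cx, cy)]


if __name__ == "__main__":
    white = [[255] * 4 for _ in range(4)]
    print(segment_to_channels(white, 0, 0, 2, 2))  # bright top-left segment: loudest in front-left
```

Equal-power gains keep perceived loudness roughly constant as a segment moves across the frame, so changes in level come from the image content rather than from its position.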

She will present a talk titled "Promoting Underrepresented Cultures through Multimedia Arts Collaboration" at the student fellows symposium "Role/Play: Collaborative Creativity and Creative Collaborations", at the National Academy of Sciences, Washington DC, March 12-14, 2018.

S. Patwardhan, A. Kawazoe, D. Kerr, M. Nakatani, Y. Visell, Too hot, too fast! Using the thermal grill illusion to explore dynamic thermal perception. Proc. IEEE Haptics Symposium, 2018.

J. Jiao, Y. Zhang, D. Wang, Y. Visell, D. Cao, X. Guo, X. Sun, Data-Driven Rendering of Fabric Textures on Electrostatic Tactile Displays. Proc. IEEE Haptics Symposium, 2018.

B. Dandu, I. Kuling, Y. Visell, Where Are My Fingers? Assessing Multi-Digit Proprioceptive Localization. Proc. IEEE Haptics Symposium, 2018.

J. van der Lagemaat, I. Kuling, Y. Visell, Tactile Distances Are Greatly Underestimated in Perception and Motor Reproduction. Proc. IEEE Haptics Symposium, 2018.

M. A. Janko, Z. Zhao, M. Kam, Y. Visell, A partial contact frictional force model for finger-surface interactions. Proc. IEEE Haptics Symposium, 2018.